
Hive Analytics Default Creation Table

- By default, the tables listed below are provided when using Analytics.

- If you require a table other than the preset one, refer to [Log Definition] to create one.

- The table name is “t_[log name]“.

- The basic creation table is separated into two sections: basic logs collected directly from the platform, and game logs that must be transmitted from the game server.

- Click on the log name to view field information for each table.

| Log Name | Table Description | Log Occurrence Time |
| --- | --- | --- |
| hive_purchase_log | A table that contains user purchase activity and payment information generated by the billing function of Hive | When receipt verification is concluded following a user’s purchase of an in-game product via Hive billing |
| hive_sub_purchase_log | A table that contains subscription product purchase data and payment details generated by the Hive billing function | When the subscription receipt is verified after the user purchases an in-game subscription product through Hive invoicing |
| hive_login_log | A table that contains the login information of users who have successfully authenticated with Hive | When logging in after user authentication via the Hive authentication function has been completed |
| hive_quit_log | A log generated when a withdrawal request occurs within the game. It stores information regarding the user’s withdrawal request and completion. | The point at which a user requests a withdrawal or when a withdrawal is completed within the game |
| hive_singular_install | A table that stores attribution information for various properties via the Analytics-provided Singular-specific endpoint URL | App installation and execution, event occurrence, app session beginning and ending, and time of link click |
| hive_adjust_fix_log | A table that stores attribution information for various properties via the URL provided by Analytics for the Adjust endpoint | App installation and execution, event occurrence, app session beginning and ending, and time of link click |
| hive_appsflyer_log | A table that stores attribution data for various properties accessible via the Appsflyer-specific endpoint URL provided by Analytics | App installation and execution, event occurrence, app session beginning and ending, and time of link click |
| hive_user_entry_log | A table that stores information about each section until the user reaches the game interface after launching the game | Time to access each section, including new installation, terms and conditions agreement, additional download, login, etc. |
| hive_au_download_log | A table that stores download information when a game is downloaded through the Hive provisioning function after the terms and conditions agreement interface | When to agree to the terms after downloading |
| hive_concurrent_user_log | A table that receives and stores connection information from clients using the Hive SDK every two minutes. ※ When the ‘Minimize Network Communication’ option is activated in the Hive console, collection does not occur. | Logs are sent every 2 minutes following login |
| hive_promotion_install_log | Promotional installation data collected when using the Hive promotional feature | When the SDK is initialized after clicking the cross campaign |
| hive_promotion_click_cross_log | Cross-click promotion records gathered when using the Hive promotion function | When a user clicks the download icon on a cross banner, offer wall, or UA invitation page |
| hive_promotion_cpi_v2_log | Promotion CPI V2 log gathered when the Hive promotion feature is employed | When the SDK is initialized after selecting the cross-campaign button (limited to the initial installation) |
| hive_promotion_open_log | Promotion open log gathered when the Hive promotion function is utilized | Promotion banner and offer wall special exposure time |
| hive_promotion_info | Promotional information collected when using the Hive promotional feature (Hive Console > Promotion) | Modification and creation time for campaigns |
| hive_ad_watch_log | Ad viewing log made available via Hive AdKit when using AD(X) or ADOP | Ad loading, viewing beginning, incentive, and closing time |
| hive_push_open_log | A table that stores information about when a user opened a push notification when using the Hive notification feature | When a user enters the game after clicking a campaign push notification |
| hive_push_send_stat_log | When using the Hive notification function, a table containing statistical information about campaigns sent from D-2 on push events after push sending is created and maintained | After compiling information on sent campaigns from D-2 |
| pub_user_property_log | Custom user attribute table that, in addition to pre-defined attributes, arbitrarily defines attribute values using data collected from the Hive SDK (Logs must be transmitted directly using the function for client log transmission) | When the customer-defined attribute occurs |
| pub_mate_log | Mate log (Logs must be transmitted directly using the function for client log transmission) | When mate acquisition and consumption changes occur |
| pub_levelup_log | Level-up log (Logs must be transmitted directly using the function for client log transmission) | When to level up a user account or character in the game |
| pub_asset_log | Currency log (Logs must be transmitted directly using the function for client log transmission) | When to purchase or ingest products |
| pub_store_click_log | Store click log (Logs must be transmitted directly using the function for client log transmission) | When a click for product details or a purchase occurs |
| pub_society_log | Social activity log (Logs must be transmitted directly using the function for client log transmission) | When changes such as inviting, joining, or adding social activities such as guilds, acquaintances, parties, etc. take place |
| pub_contents_log | Content logs (Logs must be transmitted directly using the function for client log transmission) | The status time changes when in-game content (quests, dungeons, stages, etc.) is accepted, failed, canceled, or completed |
| pub_device_info | Table that captures information about user devices via Hive SDK (Logs must be transmitted directly using the function for client log transmission) | When to initialize, log in, or change Hive SDK settings |

Log Definition

- Log definition is a function for transferring game logs to Hive Analytics v2.

- Define the logs you want to transfer from the game in the backoffice. After defining a log, apply the generated source code to the game, and the designated log will be collected.

- The backoffice also provides a feature for checking whether a log has been defined correctly and whether it is being collected properly.

- Backoffice route: Analytics > Log Definition

- The log definition function in Analytics defines which fields to send before logs are sent.

- When a log is defined, Analytics creates basic fields of two types: fields that are required for log definition, and fields that are needed to use Analytics functions.

- Additional fields can be created and used according to user needs.

- Required fields for log definition

- The table below details the required fields for Analytics log definitions.

- These fields are routinely collected when using the Hive SDK client log transfer feature.

| Category | Field Name | Description | Data Type | Example |
| --- | --- | --- | --- | --- |
| Base Time | dateTime | Log generation time. Format: “YYYY-MM-DD hh:mm:ss”. * dateTime values that fall outside the range of -4 years to +1 year from the current time are treated as exceptions. Such logs are stored individually, and they become accessible for verification two days after the date of storage. | TIMESTAMP | 2023-07-07 15:30:00 |
| Base Time | timezone | UTC offset of the time parameter value in the log. * Transmission precautions: Dynamically set and transmit the timezone value based on the dateTime value. | STRING | GMT+9:00 |
| Game Classification | appId | Application ID for each registered game in App Center. When sent as app_id, it is changed to appId and saved. | STRING | com.com2us.ios.universal |
| Game Classification | appIndex | Index number assigned to the appId. * Replacement | INTEGER | 123456 |
| Game Classification | gameId | Format that includes the domain and game name in the appId. * Replacement | STRING | com.com2us.gamename |
| Game Classification | gameIndex | Index number assigned to the game. * Replacement | INTEGER | 123456 |
| Log Name | category | Log name specified during log definition | STRING | hive_login_log |
| Log Identifier | guid | Used to remove duplicates from logs with a unique key value and one attribute row. (Even if the values of the remaining fields are identical, it is recognized as a different log if the guid value is distinct.) * Transmission precautions: It is advised to transmit a random string such as a UUID. | STRING | 61ad27eaad7ae3d1521d |
| Other | undefinedRawData | If there are elements that are not defined in the log definition, they are saved as JSON strings | STRING | `{"tier": "10", "fluentd_tag": "ha2union.game.purchase_log", "country": "HK"}` |

* Replacement: If appId cannot be added, any one of the fields marked “Replacement” can take its place as the game classification value. If appId can be added, these fields are not required.

Information about appIndex, gameId, and gameIndex can be found on the AppId list in the App Center.
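As a rough illustration of the required fields above, the sketch below assembles them for one log record. The helper names (`format_offset`, `is_in_accepted_range`, `build_required_fields`) are hypothetical and not part of the Hive SDK, and the range check approximates the "-4 years to +1 year" window with 365-day years, which is an assumption.

```python
import uuid
from datetime import datetime, timedelta, timezone


def format_offset(dt: datetime) -> str:
    """Render the UTC offset of an aware datetime, e.g. 'GMT+9:00'."""
    offset = dt.utcoffset()
    if offset is None:
        raise ValueError("dt must be timezone-aware")
    total_minutes = int(offset.total_seconds() // 60)
    sign = "+" if total_minutes >= 0 else "-"
    hours, minutes = divmod(abs(total_minutes), 60)
    return f"GMT{sign}{hours}:{minutes:02d}"


def is_in_accepted_range(dt: datetime, now: datetime) -> bool:
    """Approximate the -4 years to +1 year window (365-day years assumed)."""
    return now - timedelta(days=4 * 365) <= dt <= now + timedelta(days=365)


def build_required_fields(category: str, app_id: str, dt: datetime) -> dict:
    """Assemble the required fields for a single log record."""
    return {
        "dateTime": dt.strftime("%Y-%m-%d %H:%M:%S"),
        "timezone": format_offset(dt),  # derived from dateTime, as advised
        "appId": app_id,
        "category": category,
        "guid": uuid.uuid4().hex,  # random string so duplicates can be removed
    }


kst = timezone(timedelta(hours=9))
record = build_required_fields(
    "hive_login_log",
    "com.com2us.ios.universal",
    datetime(2023, 7, 7, 15, 30, 0, tzinfo=kst),
)
```

Deriving `timezone` from the same datetime object as `dateTime` keeps the two values consistent, which is what the transmission precaution asks for.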

- Fields for use with analytic features

- The table below displays the elements required to use various analytics functions, such as game-specific indicators and Analytics-provided segments.

- These fields must be set in order to use the functions of Analytics.

- These fields are routinely collected when using the Hive SDK client log transfer feature.

| Field Name | Description | Data Type | Example |
| --- | --- | --- | --- |
| vid | User Virtual ID generated for each game | INTEGER | 100967777206 |
| did | Device ID uniquely generated in the terminal | INTEGER | 51726905112 |
| playerId | Key used in Hive integrated authentication | INTEGER | 100967777106 |
| serverId | Unique ID information to identify the server where the game is served | STRING | GLOBAL |
| market | Store/platform information where the game is serviced | STRING | GO |
| bigqueryRegistTimestamp | Time when customer-submitted logs entered the BigQueryID table | TIMESTAMP | 2023-08-22 03:34:08 UTC |
| lang | Language information in which the game is served | STRING | EN |
| appIdGroup | Game ID for each game registered in App Center | STRING | com.com2us.testgame |
| checksum | Standard column to check duplicate data if guid is incorrect. * Transmission precautions: Combine all column names and column values except guid into a string and send it as an MD5 value. | STRING | 20ed52852cb2f72 |
| country | Country information where the game is served | STRING | US |
| company | Company information that services the game | STRING | com2us |
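The checksum rule above can be sketched as follows. The text does not specify how column names and values are ordered or joined, so the sorted `name=value` pairs joined with `&` used here are an assumption, and `compute_checksum` is a hypothetical helper name.

```python
import hashlib


def compute_checksum(record: dict) -> str:
    """MD5 over every column name and value except guid.

    Assumption: columns are sorted by name and joined as name=value
    pairs separated by '&'. The actual joining rule is not documented here.
    """
    parts = [f"{name}={record[name]}" for name in sorted(record) if name != "guid"]
    return hashlib.md5("&".join(parts).encode("utf-8")).hexdigest()


log_a = {"guid": "aaa", "vid": 100967777206, "lang": "EN"}
log_b = {"guid": "bbb", "vid": 100967777206, "lang": "EN"}
# Same content with different guid values yields the same checksum,
# which is what lets the checksum catch duplicates when guid is wrong.
same = compute_checksum(log_a) == compute_checksum(log_b)
```

Sorting the column names makes the result independent of the order in which fields were added to the record.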

Defining a Log

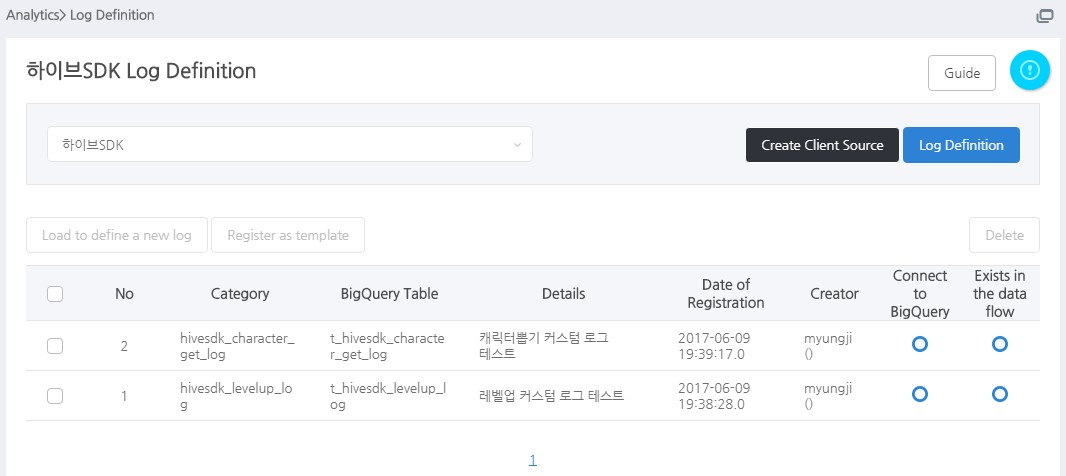

Log Definition List

- You can define a new log in the log definition list and also check the log status of the previously defined logs.

- Create Client Source: Generate a client source code to apply to the game corresponding to the entire log list that you defined.

- Log Definition: Define a new log.

- Load to define a new log: You can use this feature after selecting one of the logs that you defined previously and you’ll be moved to the screen where you copy and define the designated log definition details.

- Register as template: You can select logs that are frequently used among previously defined logs and register them as a template. Registered templates can be loaded in the log definition screen and used.

- Connect to BigQueryID: It means that the log data storage has been created correctly.

- Exists in the data flow: For newly defined logs, this is shown as “X”. Check that it has changed to “O” the following morning before using the log. Logs can still be collected on the day a new log is defined. If “X” is shown on any day other than the day the log was defined, contact the Data Technology Team (DW Part).

- If “X” is displayed for “Connect to BigQueryID” and “Exists in the data flow”, there is a problem syncing the data, and you must contact the Data Technology Team (DW Part).

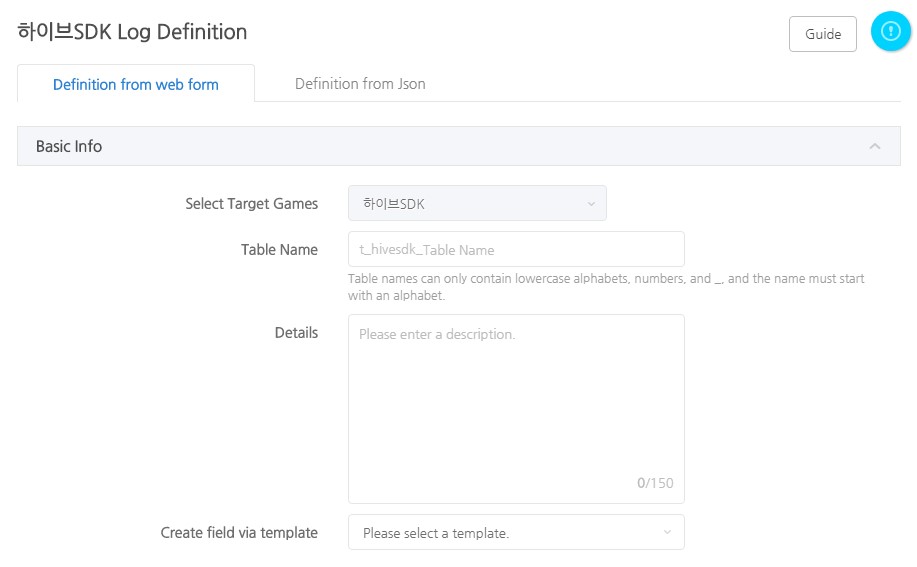

Defining a Log with Web Form

- Basic Information

- Table Name: Enter the table name of the corresponding log.

- Details: Enter details of the corresponding table. This will be exposed in the game information field of the segment, so it is highly recommended that you enter descriptions that users can easily understand. (Ex: Level – User’s account level)

- Create field via template: If you select one of the previously registered templates, the defined information of that log is displayed, and you can proceed with the log definition by modifying the parts that require modification.

- Common Field Settings

- Common Fields are predefined fields that can be used in all logs.

- If you select the Select Data checkbox, the field is used in this log. Logs can still be collected for an unchecked field as long as the game transfers it, but checking the box is more convenient because the server source code is then generated automatically. On the client, the field is collected from Hive SDK 4 even if it is not checked.

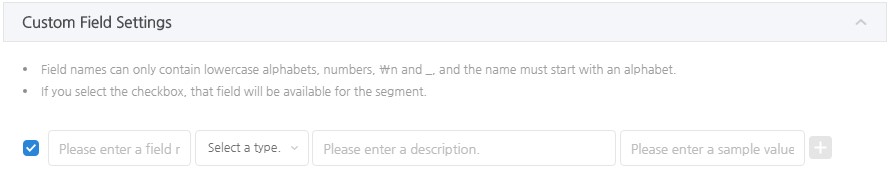

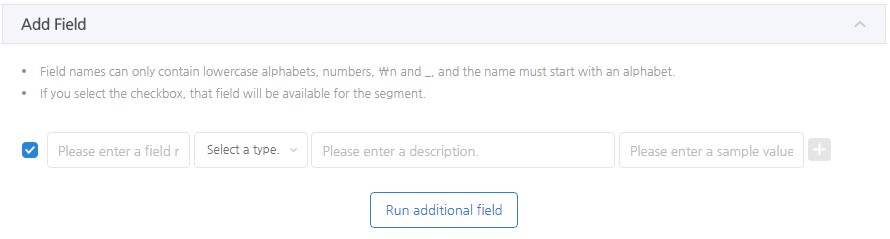

- Definition Field Settings

- Definition Field is a part where you set up the game log to be transferred. You need to enter the field name, type, description and sample value.

- Check the checkbox and the field will become a recommended field and defined as a field that can be used for the segment.

- Tap the Register Button to complete the log definition. The log will be added to the list once you finish with the log definition, and you’ll be able to add or modify fields and check the collected data in the details page.

Defining Logs with Excel

- Create Excel File

- Enter a table according to the following format.

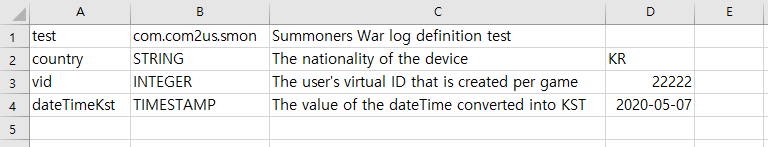

| Table Name | First part of the Appid | Table Description | |
| --- | --- | --- | --- |
| Field Name 1 | Field 1 Type | Description on Field 1 | Sample value for Field 1 |
| Field Name 2 | Field 2 Type | Description on Field 2 | Sample value for Field 2 |

- For instance, you can create a table as below.

- You can add a sheet to the excel file to define multiple logs. However, you can only define logs for the same app (game). The sheet name can be anything.


- Upload Excel File

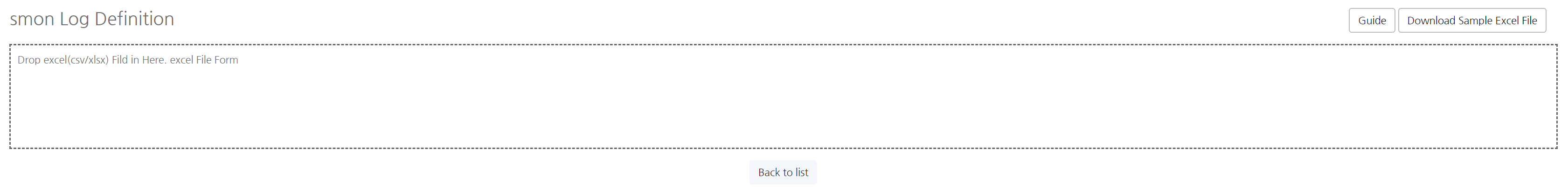

- Click “Define as Excel File” on the log definition list to move to the page shown as below.

- Drag the excel file created in #1 to the square box and click “Register”.


Managing a Log

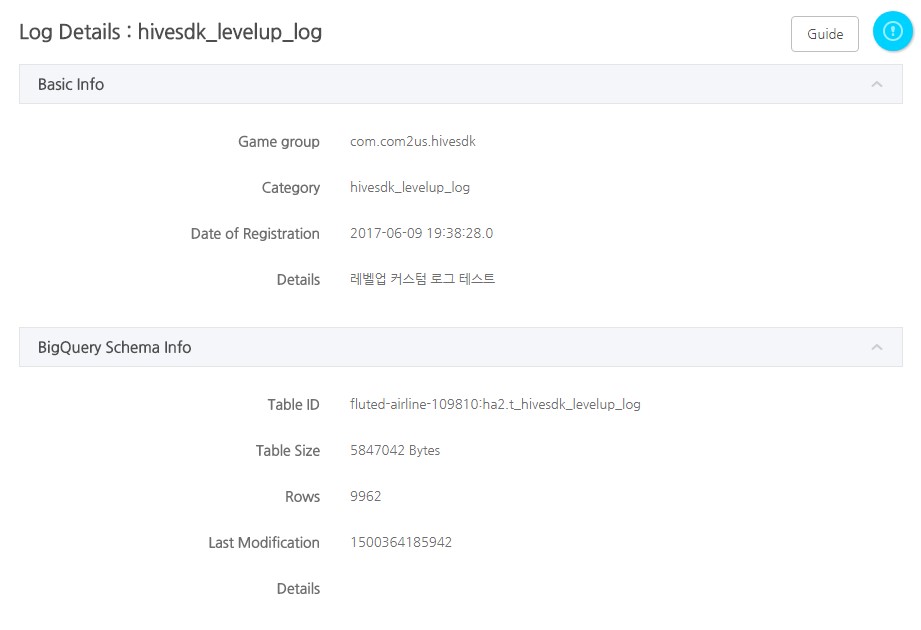

Defined Log Detail Page

- Click a log in the log definition list to move to its detail page, where you can add fields to the log or check whether the log is being transferred correctly.

- Basic Information, BigQuery Schema Information

- Basic Information: You can check the game with defined logs, category, date of registration and details. You can also change the details.

- BigQuery Schema Information: You can view the schema information saved to the BigQuery.

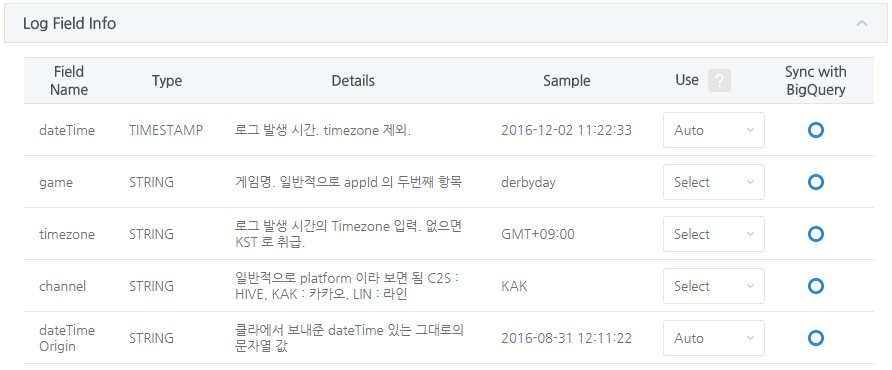

- Log Field Information

- Common Fields will be shown in black while fields added from the game will be shown in blue.

- You can modify the field details and “Use” category.

- Use: You can select the use of the corresponding field. It will be used as a reference for the person defining the log and it should be applied correctly according to the use when developing the log transmission. Fields set as “Recommended” and “Required” can be used in the segment.

- Required: Refers to a field that must be collected. The game must include a value for this field. (Ex: vid field)

- Recommended: Refers to the field that’s highly important. It’s a field that’s highly recommended to collect. You can add “Recommended” and “Required” fields as a condition for the segment.

- Auto: Refers to the field that’s required for the log collection system and it’s generated automatically. (Ex: A field that collects the country value via the client IP with the geoIpCountry field.)

- Select: Refers to the field that’s collected but optional to use. You don’t have to send this field when transferring the log from the server.

- Sync with BigQueryID: You can check if it’s synced correctly with the BigQuery. You’ll see “O” if it’s synced correctly and “X” if there’s an issue with syncing the log. Please contact the Data Technology Team (DW Part) if you see “X”.

- You can change the order of fields by dragging the Field Info table rows.

- Add Field

- Add Field: It’s a feature where you can add a field to the log that you’ve defined previously. You can add a field in the same way as you did for the log definition.

- Enter details for the field to add and click on the Run Additional Field Button to save.

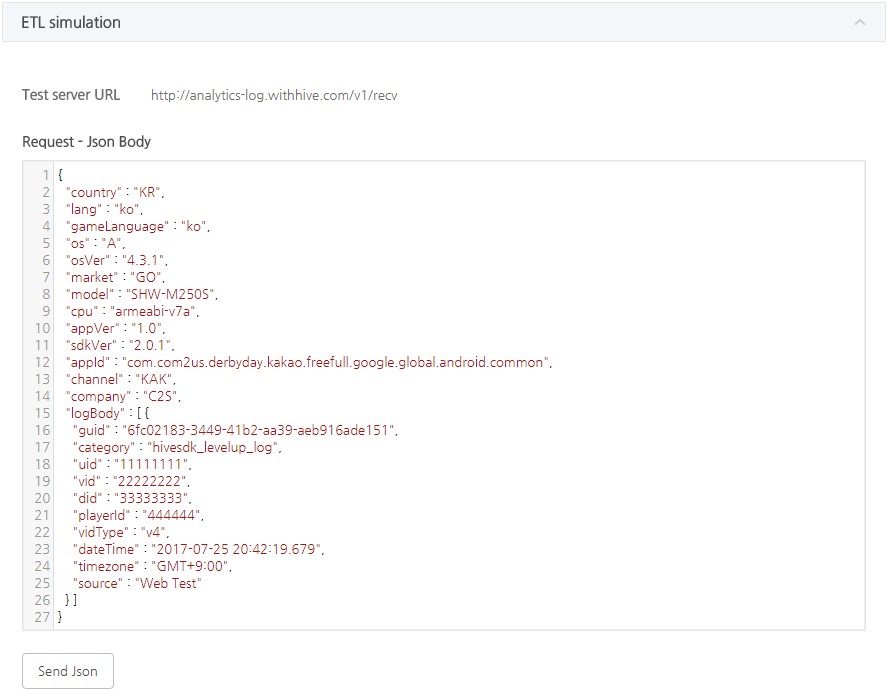

- ETL Simulation

- ETL Simulation is a feature where you can check whether the corresponding log definition is being collected correctly or not.

- Request – Json Body

- You can check the sample Json of the corresponding log and click on the Send Json Button to check if you get a correct response.

- Result values will be exposed in the Response Area below when you click on the Send Json Button. Also, the collection status will be shown in stages at the Json data storage server path.

- Click on the Json data storage server path buttons and the status value will be shown. You’ll be able to check if the data is securely saved by clicking on the BigQuery Button and the Json data saved the last will be displayed.

- Please note that the sample data collected with this feature are actually saved to the DB and there may be a difference due to the log.

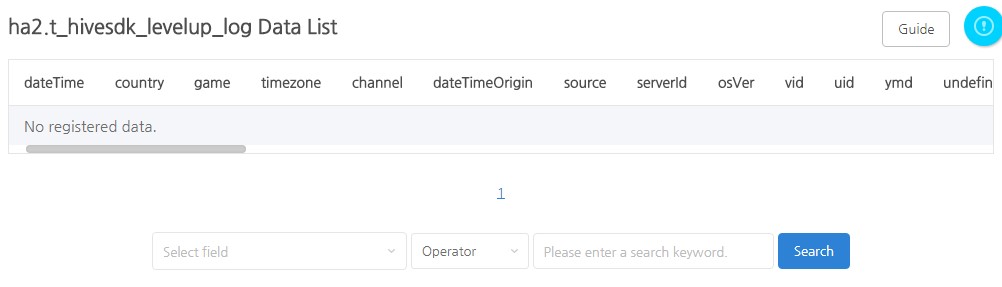

- Data Sample

- Data Sample: You can check the logs that are actually collected with the corresponding definition. Up to 10 logs are displayed, from the latest to the oldest.

- Check latest Data waiting to be saved: You can expose the latest log by refreshing.

- View More: You can view more pages to be able to check more data samples. (Below image)

- The data sample View More page shows logs from the last 7 days and lets you search by field. Scroll left and right to see all fields.

- Select Field: You can select any field and search by the selected field’s value.

- Comparison Method: Supports “=” (exact match) and “Like” searches.

- If you want to search for a specific vid’s log, select the vid field, enter the vid and only the corresponding vid’s log will be displayed.

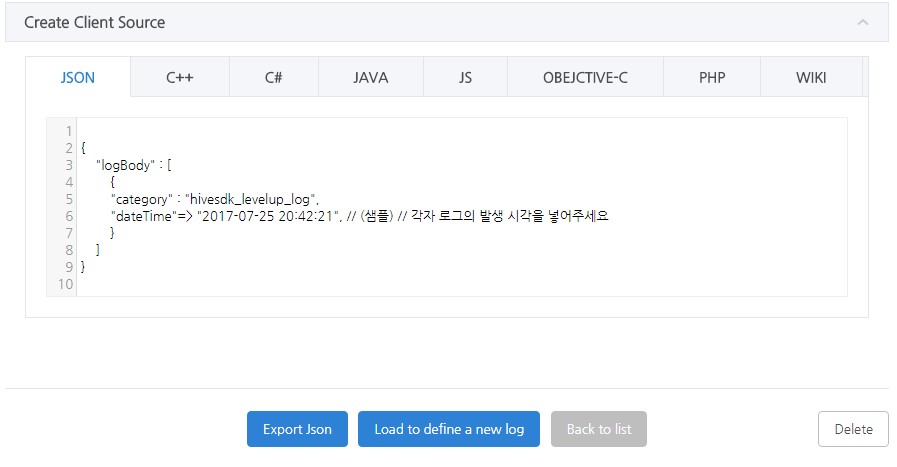

- Creating Client Source

- Create Client Source: Creates a client source of the corresponding log per language.

- Export Json: Exports the corresponding log to Json. The source will be exported on a new window and you’ll be able to use or modify the exported source.

- Load to define a new log: Click this button to define a log that’s similar to the corresponding log, and a new log definition screen with logs already defined will be displayed.

- Delete: Deletes the log definition of the corresponding log.

Send Defined Logs to Server-to-Server

Although Hive Analytics v2 recommends transferring logs through the API in Hive SDK v4, as described in “6. Creating Client Source”, you can also send logs to Analytics v2 server-to-server.

“Fluentd” will be used to send logs. Please refer to the link below on how to use it.

Hive Analytics structure : Fluentd Type

Log Definition Server-to-Server Transmission : Using Fluentd

Log Data Verification and Modification Process

- In addition to the information provided when defining the log, the following data verification and modification steps are applied.

- geoipCountry Addition

- If the clientIp field is present, it is used to perform a MaxMind DB search to match a country.

- If clientIp is not available or the search doesn’t succeed, the value from the lang field is used.

- Some of the country values in lang are changed and added.

| Before calibration | After calibration |
| --- | --- |
| ko | KR |
| ja | JP |
| vi | VN |
| es | ES |

- If it’s ultimately impossible to confirm the country value, “ET” is added to geoIpCountry.
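The fallback order described above can be sketched roughly as below. `lookup_ip` is a hypothetical stand-in for the MaxMind DB search (it returns a country code or `None`), and the exact-match, case-sensitive handling of `lang` is an assumption since the text does not state it.

```python
from typing import Callable, Optional

# Calibration table from the documentation: lang value -> country value.
LANG_TO_COUNTRY = {"ko": "KR", "ja": "JP", "vi": "VN", "es": "ES"}
UNKNOWN_COUNTRY = "ET"  # used when no country can be confirmed


def resolve_geoip_country(
    record: dict, lookup_ip: Callable[[str], Optional[str]]
) -> str:
    """Resolve geoIpCountry: clientIp lookup first, then lang, then 'ET'."""
    client_ip = record.get("clientIp")
    if client_ip:
        country = lookup_ip(client_ip)  # hypothetical MaxMind DB search
        if country:
            return country
    lang = record.get("lang")
    if lang:
        # Calibrated values are replaced; other lang values are used as-is.
        return LANG_TO_COUNTRY.get(lang, lang)
    return UNKNOWN_COUNTRY


def no_match(ip: str) -> Optional[str]:
    """Stub lookup that never finds a country, to exercise the fallbacks."""
    return None


country = resolve_geoip_country({"clientIp": "127.0.0.1", "lang": "ko"}, no_match)
```

With the stub lookup failing, the record falls back to `lang`, and `ko` is calibrated to `KR`.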

- clientIp Calibration

- The fourth octet (class 4) of the IP address is excluded from recording.

- e.g. 127.0.0.1 → 127.0.0.0
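The clientIp calibration can be pictured with a short sketch that zeroes the last octet of a dotted IPv4 address. `mask_client_ip` is a hypothetical name, and returning non-IPv4 input unchanged is an assumption, since the text only shows an IPv4 example.

```python
def mask_client_ip(client_ip: str) -> str:
    """Replace the fourth octet of a dotted IPv4 address with 0."""
    octets = client_ip.split(".")
    if len(octets) != 4:
        # Not a dotted IPv4 address; left untouched (assumption).
        return client_ip
    octets[3] = "0"
    return ".".join(octets)


masked = mask_client_ip("127.0.0.1")
```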

- Final Data Calibration Using appId

- Empty values in the fields below are filled in by looking up the appId in the data registered in App Center.

- market

- os

- company

- channel

- If the channel field is missing, “C2S” is added

- sdkVer

- appidGroup

- appidCompany

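A minimal sketch of this final calibration step is shown below, assuming the App Center data is available as a dictionary keyed by appId. The `app_center` structure and the `calibrate_with_app_center` name are hypothetical, and applying the “C2S” default after the App Center lookup is an assumed ordering.

```python
CALIBRATED_FIELDS = (
    "market", "os", "company", "channel", "sdkVer", "appidGroup", "appidCompany",
)


def calibrate_with_app_center(record: dict, app_center: dict) -> dict:
    """Fill empty calibrated fields from App Center data for the record's appId."""
    result = dict(record)  # leave the caller's record unmodified
    registered = app_center.get(result.get("appId"), {})
    for field in CALIBRATED_FIELDS:
        if not result.get(field) and registered.get(field):
            result[field] = registered[field]
    if not result.get("channel"):
        result["channel"] = "C2S"  # default when the channel field is missing
    return result


# Hypothetical App Center data for illustration only.
app_center = {"com.com2us.testgame": {"market": "GO", "os": "A", "company": "com2us"}}
calibrated = calibrate_with_app_center(
    {"appId": "com.com2us.testgame", "market": ""}, app_center
)
```

Values already present in the record are kept, so only missing or empty fields are taken from App Center.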